Welcome to PyOD V2 documentation!


Read Me First

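Welcome to PyOD, a comprehensive yet easy-to-use Python library for detecting anomalies (outliers) in multivariate data. Whether you are working on a small project or processing a large dataset, PyOD offers a range of algorithms to fit your needs.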

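PyOD V2 is now available (Paper) [ACQS+24], featuring:

Expanded Deep Learning Support: Integrates 12 modern neural models into a single PyTorch-based framework, bringing the total number of outlier detection methods to 45.

Enhanced Performance and Ease of Use: Models are optimized for efficient and consistent performance across diverse datasets.

LLM-based Model Selection: Automated model selection guided by a large language model reduces manual tuning and assists users who have limited experience with outlier detection.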

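Additional Resources:

NLP Anomaly Detection: NLP-ADBench provides both NLP anomaly detection datasets and algorithms [ALLX+24]

Time-series Outlier Detection: TODS

Graph Outlier Detection: PyGOD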

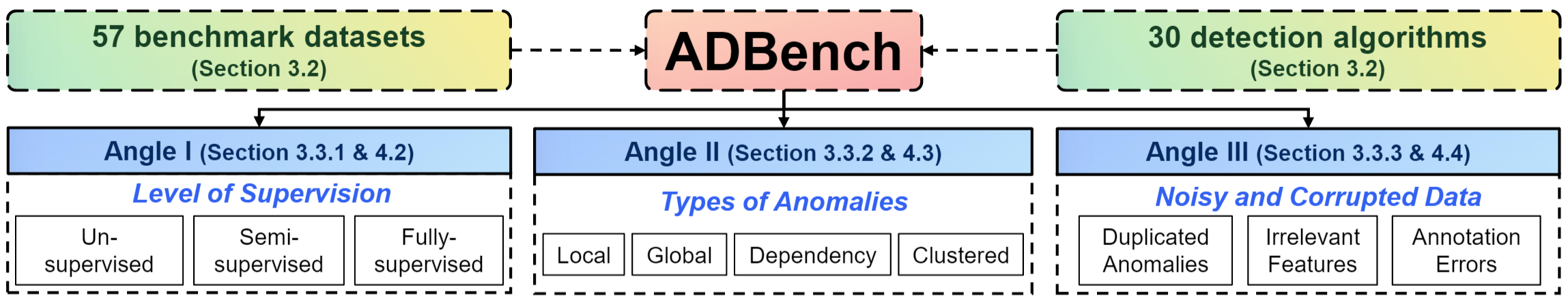

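Performance Comparison & Datasets: We have a 45-page, comprehensive anomaly detection benchmark paper. The fully open-sourced ADBench compares 30 anomaly detection algorithms on 57 benchmark datasets.

PyOD on Distributed Systems: You can also run PyOD on Databricks.

Learn More: Anomaly Detection Resources

Check out our latest research on LLM-based anomaly detection [AYNL+24]: AD-LLM: Benchmarking Large Language Models for Anomaly Detection.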

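About PyOD

Founded in 2017, PyOD has become the go-to Python library for detecting anomalous/outlying objects in multivariate data. This exciting yet challenging field is commonly referred to as outlier detection or anomaly detection.

PyOD includes more than 50 detection algorithms, from the classical LOF (SIGMOD 2000) to the cutting-edge ECOD and DIF (TKDE 2022 and 2023). Since 2017, PyOD has been used successfully in numerous academic research projects and commercial products, with more than 26 million downloads. It is also well recognized by the machine learning community, with various dedicated posts and tutorials, including Analytics Vidhya, KDnuggets, and Towards Data Science.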

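PyOD is featured for:

Unified, user-friendly interface across various algorithms.

Wide range of models, from classic techniques to the latest deep learning methods in PyTorch.

Fast training & prediction, enabled by the SUOD framework [AZHC+21].

Outlier detection with 5 lines of code: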

```python
# Example: Training an ECOD detector
# X_train and X_test: array-like data of shape (n_samples, n_features)
from pyod.models.ecod import ECOD

clf = ECOD()
clf.fit(X_train)
y_train_scores = clf.decision_scores_  # Outlier scores for training data
y_test_scores = clf.decision_function(X_test)  # Outlier scores for test data
```

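Selecting the Right Algorithm: Unsure where to start? Consider these robust and interpretable options:

ECOD: Example of using ECOD for outlier detection

Isolation Forest: Example of using Isolation Forest for outlier detection

Alternatively, explore MetaOD for a data-driven approach.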
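Part of ECOD's interpretability comes from scoring each feature separately through its empirical CDF. The NumPy sketch below follows our reading of the ECOD paper [ALZH+22]:

- compute left- and right-tail ECDF probabilities per feature;
- use per-feature skewness to pick an "automatic" tail;
- take the maximum of the three aggregated negative-log tail scores.

It is a simplified illustration, not PyOD's `ECOD` implementation. The helper names `ecdf_tails` and `ecod_like_scores` are hypothetical.

```python
import numpy as np


def ecdf_tails(X):
    """Per-column left- and right-tail empirical CDF values for X of shape (n, d)."""
    n, d = X.shape
    left = np.empty((n, d))
    right = np.empty((n, d))
    for j in range(d):
        sorted_col = np.sort(X[:, j])
        # Left tail: fraction of samples <= x; right tail: fraction of samples >= x.
        left[:, j] = np.searchsorted(sorted_col, X[:, j], side="right") / n
        right[:, j] = (n - np.searchsorted(sorted_col, X[:, j], side="left")) / n
    return left, right


def ecod_like_scores(X):
    """Hypothetical helper: ECOD-style outlier scores, higher = more abnormal."""
    X = np.asarray(X, dtype=float)
    left, right = ecdf_tails(X)
    # The sample skewness of each feature picks its "automatic" tail.
    centered = X - X.mean(axis=0)
    skew = (centered ** 3).mean(axis=0) / (X.std(axis=0) ** 3 + 1e-12)
    auto = np.where(skew < 0, left, right)  # broadcasts the per-feature choice over rows
    # Tail probabilities are always >= 1/n, so the logs are finite.
    o_left = -np.log(left).sum(axis=1)
    o_right = -np.log(right).sum(axis=1)
    o_auto = -np.log(auto).sum(axis=1)
    return np.maximum(np.maximum(o_left, o_right), o_auto)


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
X[0] = [8.0, -8.0, 8.0]  # plant a sample that is extreme in every feature
scores = ecod_like_scores(X)
print(int(np.argmax(scores)))  # the planted sample (index 0) scores highest
```

Because each feature's contribution is a separate `-log` tail probability, you can inspect the per-feature terms to see which dimensions made a sample look anomalous.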

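Citing PyOD:

PyOD 2: A Python Library for Outlier Detection with LLM-powered Model Selection is available as a preprint. If you use PyOD in a scientific publication, we would appreciate citations to the following paper: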

```bibtex
@article{zhao2024pyod2,
  author = {Chen, Sihan and Qian, Zhuangzhuang and Siu, Wingchun and Hu, Xingcan and Li, Jiaqi and Li, Shawn and Qin, Yuehan and Yang, Tiankai and Xiao, Zhuo and Ye, Wanghao and Zhang, Yichi and Dong, Yushun and Zhao, Yue},
  title = {PyOD 2: A Python Library for Outlier Detection with LLM-powered Model Selection},
  journal = {arXiv preprint arXiv:2412.12154},
  year = {2024}
}
```

The PyOD paper is published in the Journal of Machine Learning Research (JMLR), MLOSS track.

```bibtex
@article{zhao2019pyod,
  author = {Zhao, Yue and Nasrullah, Zain and Li, Zheng},
  title = {PyOD: A Python Toolbox for Scalable Outlier Detection},
  journal = {Journal of Machine Learning Research},
  year = {2019},
  volume = {20},
  number = {96},
  pages = {1-7},
  url = {http://jmlr.org/papers/v20/19-011.html}
}
```

or

Zhao, Y., Nasrullah, Z. and Li, Z., 2019. PyOD: A Python Toolbox for Scalable Outlier Detection. Journal of Machine Learning Research (JMLR), 20(96), pp.1-7.

For a broader perspective on anomaly detection, see our NeurIPS papers ADBench: Anomaly Detection Benchmark and ADGym: Design Choices for Deep Anomaly Detection:

```bibtex
@article{han2022adbench,
  title={Adbench: Anomaly detection benchmark},
  author={Han, Songqiao and Hu, Xiyang and Huang, Hailiang and Jiang, Minqi and Zhao, Yue},
  journal={Advances in Neural Information Processing Systems},
  volume={35},
  pages={32142--32159},
  year={2022}
}

@article{jiang2023adgym,
  title={ADGym: Design Choices for Deep Anomaly Detection},
  author={Jiang, Minqi and Hou, Chaochuan and Zheng, Ao and Han, Songqiao and Huang, Hailiang and Wen, Qingsong and Hu, Xiyang and Zhao, Yue},
  journal={Advances in Neural Information Processing Systems},
  volume={36},
  year={2023}
}
```

ADBench 基准测试与数据集¶

我们刚刚发布了一篇 45 页的、最全面的ADBench:异常检测基准测试 [AHHH+22]。完全开源的 ADBench 在 57 个基准数据集上比较了 30 种异常检测算法。

ADBench 的组织结构如下:

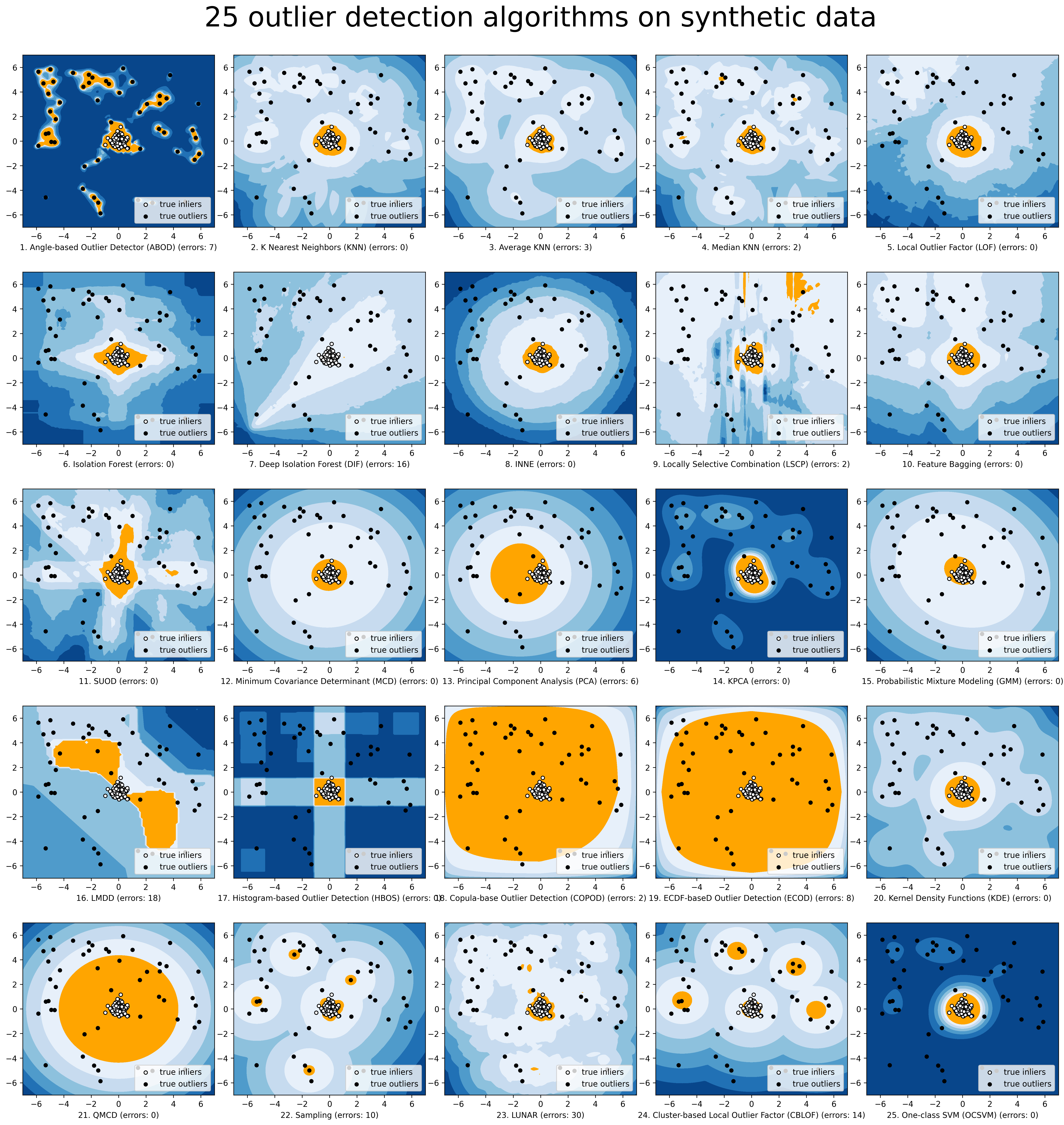

为了更直观地展示,我们通过 compare_all_models.py 提供了选定模型的比较。

Implemented Algorithms

The PyOD toolkit consists of three major functional groups:

(i) Individual Detection Algorithms:

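| Type | Abbr | Algorithm | Year | Ref |
|---|---|---|---|---|
| Probabilistic | ECOD | Unsupervised Outlier Detection Using Empirical Cumulative Distribution Functions | 2022 | [ALZH+22] |
| Probabilistic | COPOD | COPOD: Copula-Based Outlier Detection | 2020 | [ALZB+20] |
| Probabilistic | ABOD | Angle-Based Outlier Detection | 2008 | [AKZ+08] |
| Probabilistic | FastABOD | Fast Angle-Based Outlier Detection using approximation | 2008 | [AKZ+08] |
| Probabilistic | MAD | Median Absolute Deviation (MAD) | 1993 | [AIH93] |
| Probabilistic | SOS | Stochastic Outlier Selection | 2012 | |
| Probabilistic | QMCD | Quasi-Monte Carlo Discrepancy outlier detection | 2001 | [AFM01] |
| Probabilistic | KDE | Outlier Detection with Kernel Density Functions | 2007 | [ALLP07] |
| Probabilistic | Sampling | Rapid distance-based outlier detection via sampling | 2013 | [ASB13] |
| Probabilistic | GMM | Probabilistic Mixture Modeling for Outlier Analysis | | [AAgg15] (Ch. 2) |
| Linear Model | PCA | Principal Component Analysis (the sum of weighted projected distances to the eigenvector hyperplanes) | 2003 | [ASCSC03] |
| Linear Model | KPCA | Kernel Principal Component Analysis | 2007 | [AHof07] |
| Linear Model | MCD | Minimum Covariance Determinant (uses the Mahalanobis distances as the outlier scores) | 1999 | |
| Linear Model | CD | Use Cook's distance for outlier detection | 1977 | [ACoo77] |
| Linear Model | OCSVM | One-Class Support Vector Machines | 2001 | |
| Linear Model | LMDD | Deviation-based Outlier Detection (LMDD) | 1996 | [AAAR96] |
| Proximity-Based | LOF | Local Outlier Factor | 2000 | [ABKNS00] |
| Proximity-Based | COF | Connectivity-Based Outlier Factor | 2002 | [ATCFC02] |
| Proximity-Based | Incr. COF | Memory-Efficient Connectivity-Based Outlier Factor (slower but reduces storage complexity) | 2002 | [ATCFC02] |
| Proximity-Based | CBLOF | Clustering-Based Local Outlier Factor | 2003 | [AHXD03] |
| Proximity-Based | LOCI | LOCI: Fast outlier detection using the local correlation integral | 2003 | [APKGF03] |
| Proximity-Based | HBOS | Histogram-based Outlier Score | 2012 | [AGD12] |
| Proximity-Based | kNN | k Nearest Neighbors (uses the distance to the kth nearest neighbor as the outlier score) | 2000 | |
| Proximity-Based | AvgKNN | Average kNN (uses the average distance to the k nearest neighbors as the outlier score) | 2002 | |
| Proximity-Based | MedKNN | Median kNN (uses the median distance to the k nearest neighbors as the outlier score) | 2002 | |
| Proximity-Based | SOD | Subspace Outlier Detection | 2009 | |
| Proximity-Based | ROD | Rotation-based Outlier Detection | 2020 | [AABC20] |
| Outlier Ensembles | IForest | Isolation Forest | 2008 | |
| Outlier Ensembles | INNE | Isolation-based Anomaly Detection Using Nearest-Neighbor Ensembles | 2018 | [ABTA+18] |
| Outlier Ensembles | DIF | Deep Isolation Forest for Anomaly Detection | 2023 | [AXPWW23] |
| Outlier Ensembles | FB | Feature Bagging | 2005 | [ALK05] |
| Outlier Ensembles | LSCP | LSCP: Locally Selective Combination of Parallel Outlier Ensembles | 2019 | [AZNHL19] |
| Outlier Ensembles | XGBOD | Extreme Boosting Based Outlier Detection (Supervised) | 2018 | [AZH18] |
| Outlier Ensembles | LODA | Lightweight On-line Detector of Anomalies | 2016 | [APevny16] |
| Outlier Ensembles | SUOD | SUOD: Accelerating Large-scale Unsupervised Heterogeneous Outlier Detection (Acceleration) | 2021 | [AZHC+21] |
| Neural Networks | AutoEncoder | Fully connected AutoEncoder (uses reconstruction error as the outlier score) | 2015 | [AAgg15] (Ch. 3) |
| Neural Networks | VAE | Variational AutoEncoder (uses reconstruction error as the outlier score) | 2013 | [AKW13] |
| Neural Networks | Beta-VAE | Variational AutoEncoder (all loss terms customizable by varying gamma and capacity) | 2018 | [ABHP+18] |
| Neural Networks | SO_GAAL | Single-Objective Generative Adversarial Active Learning | 2019 | [ALLZ+19] |
| Neural Networks | MO_GAAL | Multiple-Objective Generative Adversarial Active Learning | 2019 | [ALLZ+19] |
| Neural Networks | DeepSVDD | Deep One-Class Classification | 2018 | [ARVG+18] |
| Neural Networks | AnoGAN | Anomaly Detection with Generative Adversarial Networks | 2017 | |
| Neural Networks | ALAD | Adversarially Learned Anomaly Detection | 2018 | [AZRF+18] |
| Neural Networks | DevNet | Deep Anomaly Detection with Deviation Networks | 2019 | [APSVDH19] |
| Neural Networks | AE1SVM | Autoencoder-based One-class Support Vector Machine | 2019 | [ANV19] |
| Graph-based | R-Graph | Outlier detection by R-graph | 2017 | [AYRV17] |
| Graph-based | LUNAR | LUNAR: Unifying Local Outlier Detection Methods via Graph Neural Networks | 2022 | [AGHNN22] |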
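The three kNN variants in the table differ only in how they summarize the distances from a sample to its k nearest neighbors. The brute-force NumPy sketch below makes that difference concrete. It has a few deliberate limitations and assumptions:

- It is meant for small data only, because it builds the full pairwise distance matrix.
- It excludes each point from its own neighbor list.
- The function name `knn_outlier_scores` is hypothetical. Its `method` values mirror the `'largest'`, `'mean'`, and `'median'` options of PyOD's `KNN` class, but the code is not that class.

```python
import numpy as np


def knn_outlier_scores(X, k=5, method="largest"):
    """Hypothetical helper: kNN-family outlier scores for the rows of X.

    method="largest" -> distance to the k-th nearest neighbor (kNN)
    method="mean"    -> average distance to the k nearest neighbors (AvgKNN)
    method="median"  -> median distance to the k nearest neighbors (MedKNN)
    """
    X = np.asarray(X, dtype=float)
    # Full Euclidean distance matrix: O(n^2) memory, fine for a sketch only.
    diffs = X[:, None, :] - X[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbor
    knn_dists = np.sort(dists, axis=1)[:, :k]  # k smallest distances per row
    if method == "largest":
        return knn_dists[:, -1]
    if method == "mean":
        return knn_dists.mean(axis=1)
    if method == "median":
        return np.median(knn_dists, axis=1)
    raise ValueError(f"unknown method: {method}")


# Four points on a unit square plus one far-away point.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [10.0, 10.0]])
for m in ("largest", "mean", "median"):
    print(m, knn_outlier_scores(X, k=2, method=m).round(2))
```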

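(ii) Outlier Ensembles & Outlier Detector Combination Frameworks:

| Type | Abbr | Algorithm | Year | Ref |
|---|---|---|---|---|
| Outlier Ensembles | FB | Feature Bagging | 2005 | [ALK05] |
| Outlier Ensembles | LSCP | LSCP: Locally Selective Combination of Parallel Outlier Ensembles | 2019 | [AZNHL19] |
| Outlier Ensembles | XGBOD | Extreme Boosting Based Outlier Detection (Supervised) | 2018 | [AZH18] |
| Outlier Ensembles | LODA | Lightweight On-line Detector of Anomalies | 2016 | [APevny16] |
| Outlier Ensembles | SUOD | SUOD: Accelerating Large-scale Unsupervised Heterogeneous Outlier Detection (Acceleration) | 2021 | [AZHC+21] |
| Combination | Average | Simple combination by averaging the scores | 2015 | [AAS15] |
| Combination | Weighted Average | Simple combination by averaging the scores with detector weights | 2015 | [AAS15] |
| Combination | Maximization | Simple combination by taking the maximum score | 2015 | [AAS15] |
| Combination | AOM | Average of Maximum | 2015 | [AAS15] |
| Combination | MOA | Maximum of Average | 2015 | [AAS15] |
| Combination | Median | Simple combination by taking the median of the scores | 2015 | [AAS15] |
| Combination | Majority Vote | Simple combination by taking the majority vote of the labels (weights can be used) | 2015 | [AAS15] |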
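The score-combination methods in the table above assume the detectors' scores are on a comparable scale, so the first step below is to z-score each detector's column. The NumPy sketch then applies average, maximization, median, and the bucketed AOM/MOA schemes of Aggarwal and Sathe [AAS15], plus a weighted majority vote on binary labels. PyOD ships its own versions in `pyod.models.combination`. This sketch is only an illustration, and several of its choices are assumptions:

- the function names;
- z-score standardization;
- a random, equal-size assignment of detectors to buckets.

```python
import numpy as np


def standardize(scores):
    """Z-score each detector's column (shape: n_samples x n_detectors)."""
    scores = np.asarray(scores, dtype=float)
    return (scores - scores.mean(axis=0)) / scores.std(axis=0)


def bucketed(scores, n_buckets, inner, outer, seed=0):
    """Randomly split detectors into equal buckets, reduce each bucket with
    `inner`, then reduce the per-bucket results with `outer`."""
    n_detectors = scores.shape[1]
    if n_detectors % n_buckets != 0:
        raise ValueError("n_detectors must be divisible by n_buckets")
    order = np.random.default_rng(seed).permutation(n_detectors)
    groups = np.split(order, n_buckets)
    per_bucket = np.column_stack([inner(scores[:, g], axis=1) for g in groups])
    return outer(per_bucket, axis=1)


def combine(scores, how="average", n_buckets=2, weights=None):
    """Hypothetical helper: combine standardized detector scores per sample."""
    s = standardize(scores)
    if how == "average":  # weights=None gives a plain mean
        return np.average(s, axis=1, weights=weights)
    if how == "maximization":
        return s.max(axis=1)
    if how == "median":
        return np.median(s, axis=1)
    if how == "aom":  # Average of Maximum
        return bucketed(s, n_buckets, np.max, np.mean)
    if how == "moa":  # Maximum of Average
        return bucketed(s, n_buckets, np.mean, np.max)
    raise ValueError(f"unknown combination: {how}")


def majority_vote(labels, weights=None):
    """Weighted majority vote over 0/1 labels (one column per detector)."""
    labels = np.asarray(labels)
    w = np.ones(labels.shape[1]) if weights is None else np.asarray(weights, dtype=float)
    return (labels @ w > w.sum() / 2).astype(int)


# Usage: 4 hypothetical detectors (columns) scoring 6 samples (rows).
raw = np.array([
    [0.1, 1.0, 20.0, 0.5],
    [0.2, 1.1, 22.0, 0.4],
    [0.1, 0.9, 21.0, 0.6],
    [0.9, 3.0, 60.0, 2.5],  # scored highest by every detector
    [0.2, 1.0, 19.0, 0.5],
    [0.3, 1.2, 23.0, 0.4],
])
for how in ("average", "maximization", "median", "aom", "moa"):
    print(how, combine(raw, how=how).round(2))
```

Standardizing first matters: without it, the third detector's large raw values would dominate average and maximization regardless of what the other detectors say.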

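(iii) Utility Functions:

| Type | Function |
|---|---|
| Data | Synthesized data generation; normal data is generated by a multivariate Gaussian distribution and outliers are generated by a uniform distribution |
| Data | Synthesized data generation in clusters; more complex data patterns can be created with multiple clusters |
| Stat | Calculate the weighted Pearson correlation of two samples |
| Utility | Turn raw outlier scores into binary labels by assigning 1 to the top n outlier scores |
| Utility | Calculate precision @ rank n |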
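The last two utilities are easy to state precisely, as the NumPy sketch below shows. It makes several assumptions:

- When `n` is not given, it defaults to the number of true outliers in `y_true`.
- Ties at the cut-off are broken by sort order.
- The function names are hypothetical.

PyOD's own utilities may handle these details differently.

```python
import numpy as np


def top_n_labels(scores, n):
    """Label the n highest-scoring samples as outliers (1), the rest as 0."""
    scores = np.asarray(scores, dtype=float)
    labels = np.zeros(len(scores), dtype=int)
    if n > 0:
        labels[np.argsort(scores)[-n:]] = 1
    return labels


def precision_at_n(y_true, scores, n=None):
    """Fraction of true outliers among the n highest-scoring samples.

    Assumption: if n is None, n = number of true outliers in y_true.
    """
    y_true = np.asarray(y_true, dtype=int)
    if n is None:
        n = int(y_true.sum())
    if n <= 0:
        raise ValueError("n must be positive")
    predicted = top_n_labels(scores, n)
    return float((predicted & y_true).sum() / n)


y_true = [0, 0, 1, 1, 0, 0]
scores = [0.1, 0.4, 0.9, 0.3, 0.8, 0.2]
print(top_n_labels(scores, 2))         # [0 0 1 0 1 0]
print(precision_at_n(y_true, scores))  # 0.5
```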

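API Cheatsheet & Reference

The following APIs apply to all detector models, for ease of use.

pyod.models.base.BaseDetector.fit(): Fit the detector. y is ignored in unsupervised methods.

pyod.models.base.BaseDetector.decision_function(): Predict raw anomaly scores of X using the fitted detector.

pyod.models.base.BaseDetector.predict(): Predict whether a particular sample is an outlier using the fitted detector.

pyod.models.base.BaseDetector.predict_proba(): Predict the probability of a sample being an outlier using the fitted detector.

pyod.models.base.BaseDetector.predict_confidence(): Predict the model's sample-wise confidence (also available through predict and predict_proba).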

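Key Attributes of a fitted model:

pyod.models.base.BaseDetector.decision_scores_: The outlier scores of the training data. The higher the score, the more abnormal the sample; outliers tend to have higher scores.

pyod.models.base.BaseDetector.labels_: The binary labels of the training data. 0 stands for inliers and 1 for outliers/anomalies.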
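The link between the two attributes can be sketched as follows. The sketch assumes the contamination-based thresholding PyOD detectors apply by default: the threshold is the (1 - contamination) percentile of the training scores, and training samples scoring strictly above it get label 1. The function name is hypothetical. The sketch shows the idea and is not the library code.

```python
import numpy as np


def labels_from_scores(decision_scores, contamination=0.1):
    """Hypothetical helper: turn training outlier scores into 0/1 labels.

    The threshold is the (1 - contamination) percentile of the scores;
    samples scoring strictly above it are flagged as outliers (1).
    """
    decision_scores = np.asarray(decision_scores, dtype=float)
    threshold = np.percentile(decision_scores, 100 * (1 - contamination))
    labels = (decision_scores > threshold).astype(int)
    return threshold, labels


scores = np.arange(20, dtype=float)  # toy training scores 0.0, 1.0, ..., 19.0
threshold, labels = labels_from_scores(scores, contamination=0.1)
print(threshold, labels.sum())  # the two highest-scoring samples are flagged
```

Under this scheme, `contamination` directly controls roughly what fraction of the training data ends up in `labels_` as outliers.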

References

Charu C Aggarwal and Saket Sathe. Theoretical foundations and algorithms for outlier ensembles. ACM SIGKDD Explorations Newsletter, 17(1):24–47, 2015.

Yahya Almardeny, Noureddine Boujnah, and Frances Cleary. A novel outlier detection method for multivariate data. IEEE Transactions on Knowledge and Data Engineering, 2020.

Fabrizio Angiulli and Clara Pizzuti. Fast outlier detection in high dimensional spaces. In European Conference on Principles of Data Mining and Knowledge Discovery, 15–27. Springer, 2002.

Andreas Arning, Rakesh Agrawal, and Prabhakar Raghavan. A linear method for deviation detection in large databases. In KDD, volume 1141, 972–981. 1996.

Tharindu R Bandaragoda, Kai Ming Ting, David Albrecht, Fei Tony Liu, Ye Zhu, and Jonathan R Wells. Isolation-based anomaly detection using nearest-neighbor ensembles. Computational Intelligence, 34(4):968–998, 2018.

Markus M Breunig, Hans-Peter Kriegel, Raymond T Ng, and Jörg Sander. LOF: identifying density-based local outliers. In ACM SIGMOD Record, volume 29, 93–104. ACM, 2000.

Christopher P Burgess, Irina Higgins, Arka Pal, Loic Matthey, Nick Watters, Guillaume Desjardins, and Alexander Lerchner. Understanding disentangling in β-VAE. arXiv preprint arXiv:1804.03599, 2018.

Sihan Chen, Zhuangzhuang Qian, Wingchun Siu, Xingcan Hu, Jiaqi Li, Shawn Li, Yuehan Qin, Tiankai Yang, Zhuo Xiao, Wanghao Ye, and others. PyOD 2: a Python library for outlier detection with LLM-powered model selection. arXiv preprint arXiv:2412.12154, 2024.

R Dennis Cook. Detection of influential observation in linear regression. Technometrics, 19(1):15–18, 1977.

Kai-Tai Fang and Chang-Xing Ma. Wrap-around L2-discrepancy of random sampling, Latin hypercube and uniform designs. Journal of Complexity, 17(4):608–624, 2001.

Markus Goldstein and Andreas Dengel. Histogram-based outlier score (HBOS): a fast unsupervised anomaly detection algorithm. KI-2012: Poster and Demo Track, pages 59–63, 2012.

Adam Goodge, Bryan Hooi, See-Kiong Ng, and Wee Siong Ng. LUNAR: unifying local outlier detection methods via graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 36, 6737–6745. 2022.

Songqiao Han, Xiyang Hu, Hailiang Huang, Minqi Jiang, and Yue Zhao. ADBench: anomaly detection benchmark. arXiv preprint arXiv:2206.09426, 2022.

Johanna Hardin and David M Rocke. Outlier detection in the multiple cluster setting using the minimum covariance determinant estimator. Computational Statistics & Data Analysis, 44(4):625–638, 2004.

Zengyou He, Xiaofei Xu, and Shengchun Deng. Discovering cluster-based local outliers. Pattern Recognition Letters, 24(9-10):1641–1650, 2003.

Heiko Hoffmann. Kernel PCA for novelty detection. Pattern Recognition, 40(3):863–874, 2007.

Boris Iglewicz and David Caster Hoaglin. How to detect and handle outliers. Volume 16. ASQ Press, 1993.

JHM Janssens, Ferenc Huszár, EO Postma, and HJ van den Herik. Stochastic outlier selection. Technical Report TiCC TR 2012-001, Tilburg University, Tilburg Center for Cognition and Communication, Tilburg, The Netherlands, 2012.

Diederik P Kingma and Max Welling. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2013.

Hans-Peter Kriegel, Peer Kröger, Erich Schubert, and Arthur Zimek. Outlier detection in axis-parallel subspaces of high dimensional data. In Pacific-Asia Conference on Knowledge Discovery and Data Mining, 831–838. Springer, 2009.

Hans-Peter Kriegel, Arthur Zimek, and others. Angle-based outlier detection in high-dimensional data. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining, 444–452. ACM, 2008.

Longin Jan Latecki, Aleksandar Lazarevic, and Dragoljub Pokrajac. Outlier detection with kernel density functions. In International Workshop on Machine Learning and Data Mining in Pattern Recognition, 61–75. Springer, 2007.

Aleksandar Lazarevic and Vipin Kumar. Feature bagging for outlier detection. In Proceedings of the eleventh ACM SIGKDD international conference on Knowledge discovery in data mining, 157–166. ACM, 2005.

Yuangang Li, Jiaqi Li, Zhuo Xiao, Tiankai Yang, Yi Nian, Xiyang Hu, and Yue Zhao. NLP-ADBench: NLP anomaly detection benchmark. arXiv preprint arXiv:2412.04784, 2024.

Zheng Li, Yue Zhao, Nicola Botta, Cezar Ionescu, and Xiyang Hu. COPOD: copula-based outlier detection. In IEEE International Conference on Data Mining (ICDM). IEEE, 2020.

Zheng Li, Yue Zhao, Xiyang Hu, Nicola Botta, Cezar Ionescu, and H. George Chen. ECOD: unsupervised outlier detection using empirical cumulative distribution functions. IEEE Transactions on Knowledge and Data Engineering, 2022.

Fei Tony Liu, Kai Ming Ting, and Zhi-Hua Zhou. Isolation forest. In Data Mining, 2008. ICDM'08. Eighth IEEE International Conference on, 413–422. IEEE, 2008.

Fei Tony Liu, Kai Ming Ting, and Zhi-Hua Zhou. Isolation-based anomaly detection. ACM Transactions on Knowledge Discovery from Data (TKDD), 6(1):3, 2012.

Yezheng Liu, Zhe Li, Chong Zhou, Yuanchun Jiang, Jianshan Sun, Meng Wang, and Xiangnan He. Generative adversarial active learning for unsupervised outlier detection. IEEE Transactions on Knowledge and Data Engineering, 2019.

Minh-Nghia Nguyen and Ngo Anh Vien. Scalable and interpretable one-class SVMs with deep learning and random Fourier features. In Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2018, Dublin, Ireland, September 10–14, 2018, Proceedings, Part I 18, 157–172. Springer, 2019.

Guansong Pang, Chunhua Shen, and Anton Van Den Hengel. Deep anomaly detection with deviation networks. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, 353–362. 2019.

Spiros Papadimitriou, Hiroyuki Kitagawa, Phillip B Gibbons, and Christos Faloutsos. LOCI: fast outlier detection using the local correlation integral. In Data Engineering, 2003. Proceedings. 19th International Conference on, 315–326. IEEE, 2003.

Lorenzo Perini, Vincent Vercruyssen, and Jesse Davis. Quantifying the confidence of anomaly detectors in their example-wise predictions. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 227–243. Springer, 2020.

Tomáš Pevný. LODA: lightweight on-line detector of anomalies. Machine Learning, 102(2):275–304, 2016.

Sridhar Ramaswamy, Rajeev Rastogi, and Kyuseok Shim. Efficient algorithms for mining outliers from large data sets. In ACM SIGMOD Record, volume 29, 427–438. ACM, 2000.

Peter J Rousseeuw and Katrien Van Driessen. A fast algorithm for the minimum covariance determinant estimator. Technometrics, 41(3):212–223, 1999.

Lukas Ruff, Robert Vandermeulen, Nico Görnitz, Lucas Deecke, Shoaib Siddiqui, Alexander Binder, Emmanuel Müller, and Marius Kloft. Deep one-class classification. International conference on machine learning, 2018.

Thomas Schlegl, Philipp Seeböck, Sebastian M Waldstein, Ursula Schmidt-Erfurth, and Georg Langs. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In International conference on information processing in medical imaging, 146–157. Springer, 2017.

Bernhard Schölkopf, John C Platt, John Shawe-Taylor, Alex J Smola, and Robert C Williamson. Estimating the support of a high-dimensional distribution. Neural computation, 13(7):1443–1471, 2001.

Mei-Ling Shyu, Shu-Ching Chen, Kanoksri Sarinnapakorn, and LiWu Chang. A novel anomaly detection scheme based on principal component classifier. Technical Report, MIAMI UNIV CORAL GABLES FL DEPT OF ELECTRICAL AND COMPUTER ENGINEERING, 2003.

Mahito Sugiyama and Karsten Borgwardt. Rapid distance-based outlier detection via sampling. Advances in neural information processing systems, 2013.

Jian Tang, Zhixiang Chen, Ada Wai-Chee Fu, and David W Cheung. Enhancing effectiveness of outlier detections for low density patterns. In Pacific-Asia Conference on Knowledge Discovery and Data Mining, 535–548. Springer, 2002.

Hongzuo Xu, Guansong Pang, Yijie Wang, and Yongjun Wang. Deep isolation forest for anomaly detection. IEEE Transactions on Knowledge and Data Engineering, 1–14, 2023. doi:10.1109/TKDE.2023.3270293.

Tiankai Yang, Yi Nian, Shawn Li, Ruiyao Xu, Yuangang Li, Jiaqi Li, Zhuo Xiao, Xiyang Hu, Ryan Rossi, Kaize Ding, and others. AD-LLM: benchmarking large language models for anomaly detection. arXiv preprint arXiv:2412.11142, 2024.

Chong You, Daniel P Robinson, and René Vidal. Provable self-representation based outlier detection in a union of subspaces. In Proceedings of the IEEE conference on computer vision and pattern recognition, 3395–3404. 2017.

Houssam Zenati, Manon Romain, Chuan-Sheng Foo, Bruno Lecouat, and Vijay Chandrasekhar. Adversarially learned anomaly detection. In 2018 IEEE International conference on data mining (ICDM), 727–736. IEEE, 2018.

Yue Zhao and Maciej K Hryniewicki. XGBOD: improving supervised outlier detection with unsupervised representation learning. In International Joint Conference on Neural Networks (IJCNN). IEEE, 2018.

Yue Zhao, Xiyang Hu, Cheng Cheng, Cong Wang, Changlin Wan, Wen Wang, Jianing Yang, Haoping Bai, Zheng Li, Cao Xiao, Yunlong Wang, Zhi Qiao, Jimeng Sun, and Leman Akoglu. SUOD: accelerating large-scale unsupervised heterogeneous outlier detection. Proceedings of Machine Learning and Systems, 2021.

Yue Zhao, Zain Nasrullah, Maciej K Hryniewicki, and Zheng Li. LSCP: locally selective combination in parallel outlier ensembles. In Proceedings of the 2019 SIAM International Conference on Data Mining, SDM 2019, 585–593. Calgary, Canada, May 2019. SIAM. URL: https://doi.org/10.1137/1.9781611975673.66, doi:10.1137/1.9781611975673.66.